A Silicon Valley Expert Explains How Claude's Official System Prompt Is Designed

Claude's System Prompt: Chatbots Are More Than Just Models

https://www.dbreunig.com/2025/05/07/claude-s-system-prompt-chatbots-are-more-than-just-models.html

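A couple days ago, Ásgeir Thor Johnson convinced Claude to give up its system prompt. The prompt is a good reminder that chatbots are more than just their model. They're tools and instructions that accrue and are honed through user feedback and design.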

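For those who don't know, a system prompt is a (generally) constant prompt that tells an LLM how it should reply to a user's prompt. A system prompt is kind of like the "settings" or "preferences" for an LLM. It might describe the tone it should respond with, define tools it can use to answer the user's prompt, set contextual information not in the training data, and more.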
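To make the idea concrete, here is a minimal sketch of how a chat application might attach the same system prompt to every request. The request layout (a `system` string plus a list of role/content dicts) and the names `SYSTEM_PROMPT` and `build_request` are illustrative assumptions, not Anthropic's actual format.

```python
from typing import Dict, List

# Hypothetical fixed system prompt: the same text accompanies every conversation.
SYSTEM_PROMPT = (
    "You are a helpful assistant. Respond in a warm, concise tone. "
    "Use the web_search tool only for recent events."
)


def build_request(history: List[Dict[str, str]], user_message: str) -> Dict[str, object]:
    """Assemble a request: constant system prompt + prior turns + the new user turn."""
    messages = list(history)  # copy so the caller's history is not mutated
    messages.append({"role": "user", "content": user_message})
    return {"system": SYSTEM_PROMPT, "messages": messages}


if __name__ == "__main__":
    first = build_request([], "Hi!")
    turns = first["messages"] + [{"role": "assistant", "content": "Hello!"}]
    second = build_request(turns, "Thanks")
    # The system prompt is identical across turns; only the message list grows.
    print(first["system"] == second["system"], len(second["messages"]))
```

The design point is that the user never sees or edits `SYSTEM_PROMPT`; it rides along with every turn, which is why its length matters.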

Claude's system prompt is long: 16,739 words, or 110kb. For comparison, the system prompt for OpenAI's o4-mini in ChatGPT is 2,218 words long, or 15.1kb, roughly 13% the length of Claude's.
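The "roughly 13%" figure can be checked from the numbers above. The ratio lands near 13% whether it is measured by words or by bytes:

```python
# Sizes quoted above for the two system prompts.
claude_words, claude_kb = 16_739, 110.0
o4_mini_words, o4_mini_kb = 2_218, 15.1

word_ratio = o4_mini_words / claude_words  # ≈ 0.1325
size_ratio = o4_mini_kb / claude_kb        # ≈ 0.1373

print(f"by words: {word_ratio:.1%}, by size: {size_ratio:.1%}")
```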

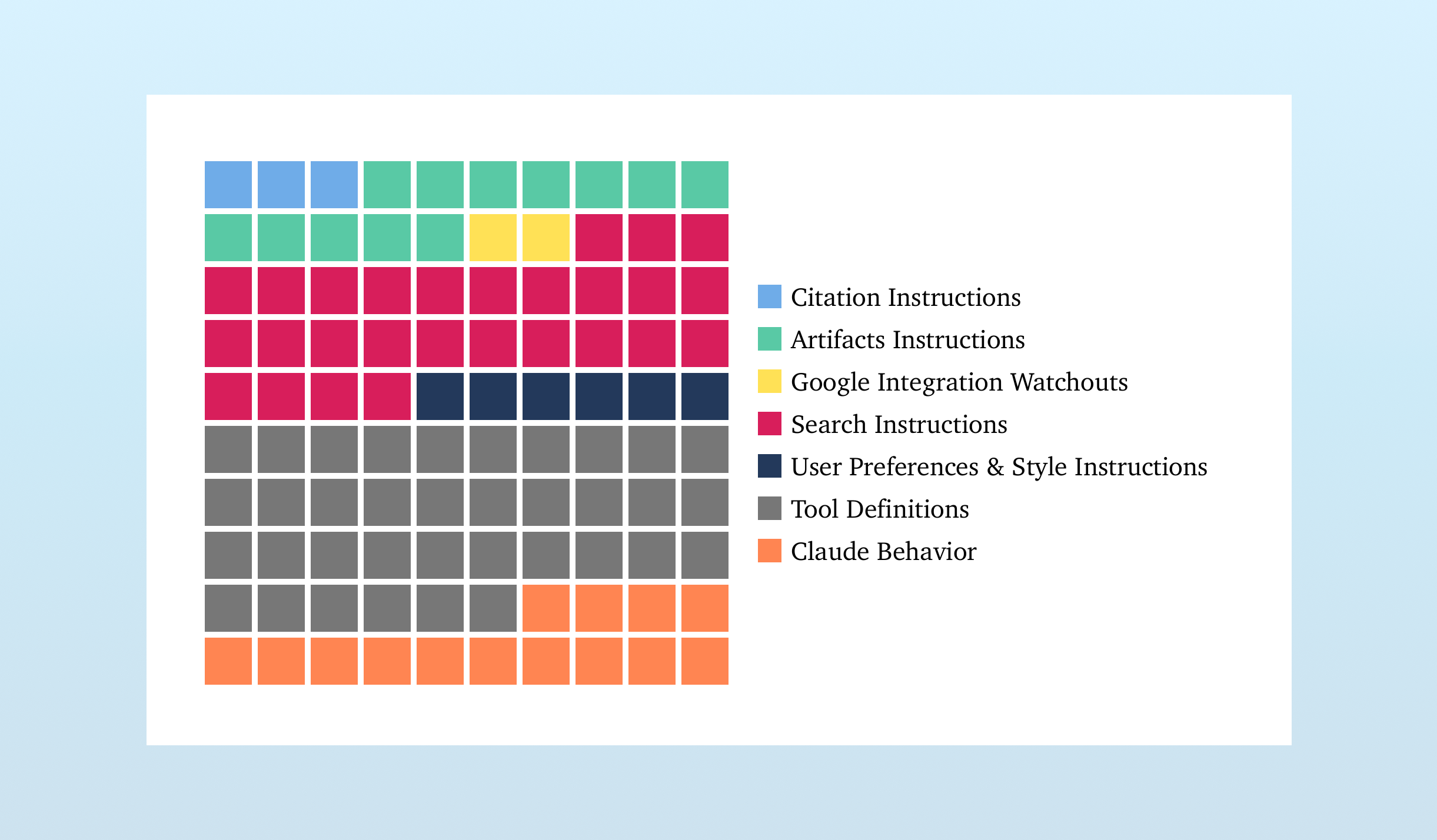

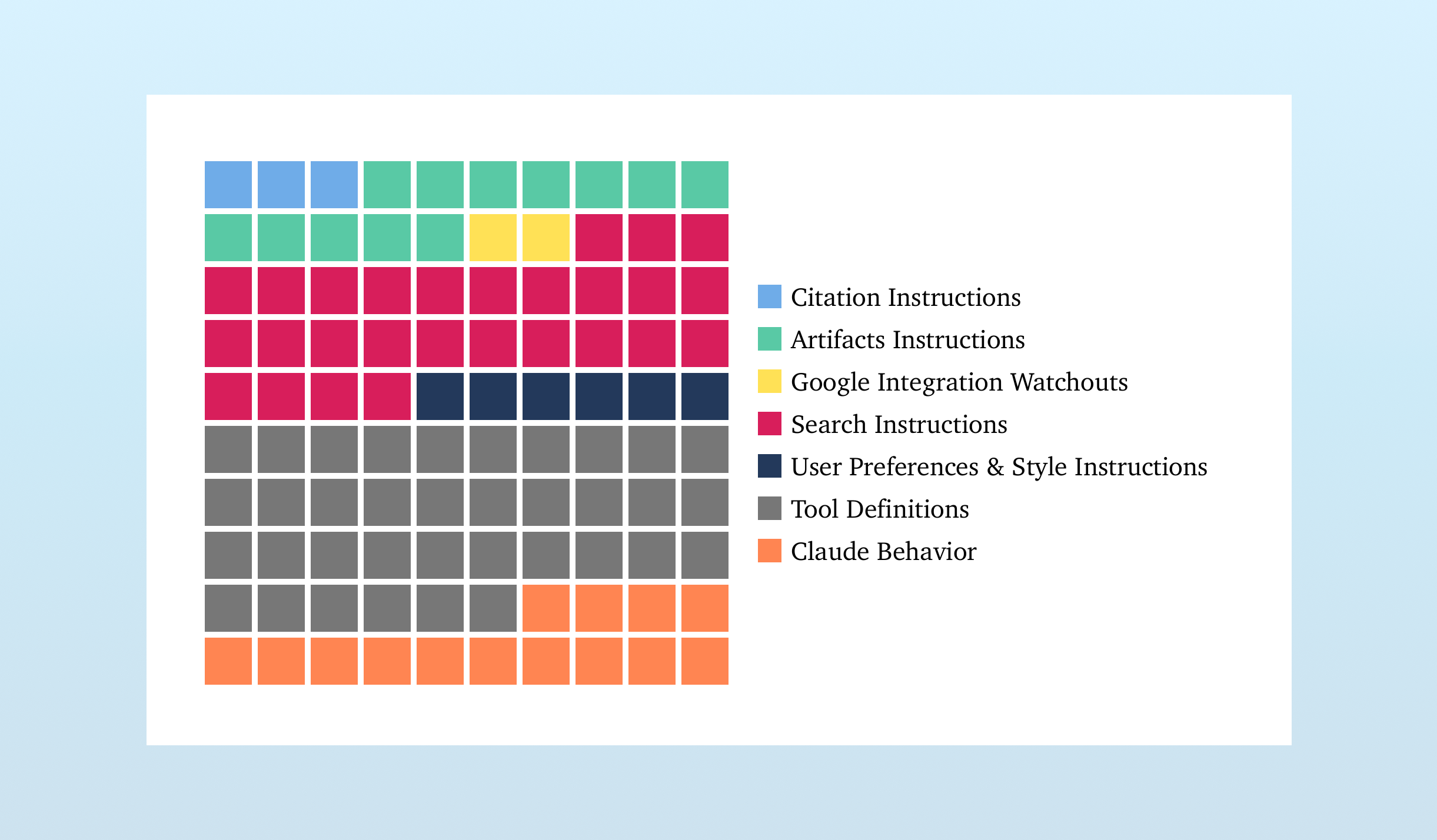

Here's what's in Claude's prompt:

Let's break down each component.

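The biggest component, the Tool Definitions, is populated by information from MCP servers. MCP servers differ from bog-standard APIs in that they provide instructions to the LLM detailing how and when to use them.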
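Here is a rough sketch of how tool definitions advertised by MCP servers might be serialized into the tool section of a system prompt. The `<functions>`/`<function>` wrapper tags, the helper `render_tool_section`, and the `drive_search` tool are all assumptions for illustration. The tool dicts are shaped like the `web_search` example that follows, not like any confirmed wire format.

```python
import json
from typing import Any, Dict, List


def render_tool_section(tools: List[Dict[str, Any]]) -> str:
    """Serialize each tool definition as JSON inside hypothetical XML-style wrapper tags."""
    lines = ["<functions>"]
    # Sorting by name keeps the prompt text stable regardless of server response order.
    for tool in sorted(tools, key=lambda t: t["name"]):
        lines.append(f"<function>{json.dumps(tool, ensure_ascii=False)}</function>")
    lines.append("</functions>")
    return "\n".join(lines)


if __name__ == "__main__":
    # Simplified tool definitions; "drive_search" and "api_query" are hypothetical names.
    tools = [
        {"name": "web_search", "description": "Search the web",
         "parameters": {"type": "object",
                        "properties": {"query": {"type": "string"}},
                        "required": ["query"]}},
        {"name": "drive_search", "description": "Search the user's Google Drive",
         "parameters": {"type": "object",
                        "properties": {"api_query": {"type": "string"}}}},
    ]
    print(render_tool_section(tools))
```

Each added tool grows the prompt by the full length of its description. A 1,700-word description costs 1,700 words on every request.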

In this prompt, there are 14 different tools detailed by MCPs. Here's an example of one:

```json
{
  "description": "Search the web",
  "name": "web_search",
  "parameters": {
    "additionalProperties": false,
    "properties": {
      "query": {
        "description": "Search query",
        "title": "Query",
        "type": "string"
      }
    },
    "required": ["query"],
    "title": "BraveSearchParams",
    "type": "object"
  }
}
```

This example is simple and has a very short "description" field. The Google Drive search tool, by contrast, has a description over 1,700 words long. It can get complex.
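The `parameters` object in the `web_search` example above is a JSON Schema. To illustrate what it constrains, here is a hand-rolled check that covers only the keywords used in that example: `type`, `properties`, `required`, and `additionalProperties`. It is a sketch. A real host application would more likely use a full JSON Schema validator, and the helper name `validate_args` is hypothetical.

```python
from typing import Any, Dict, List

# The "parameters" schema from the web_search tool definition above.
WEB_SEARCH_PARAMS = {
    "additionalProperties": False,
    "properties": {"query": {"description": "Search query", "title": "Query", "type": "string"}},
    "required": ["query"],
    "title": "BraveSearchParams",
    "type": "object",
}

# Only the JSON types this sketch needs.
_PY_TYPES = {"string": str, "object": dict}


def validate_args(args: Any, schema: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the tool call looks valid."""
    if not isinstance(args, _PY_TYPES[schema["type"]]):
        return [f"expected {schema['type']}"]
    errors: List[str] = []
    props = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in args:
            errors.append(f"missing required field: {name}")
    if schema.get("additionalProperties") is False:
        for name in args:
            if name not in props:
                errors.append(f"unexpected field: {name}")
    for name, value in args.items():
        if name in props and not isinstance(value, _PY_TYPES[props[name]["type"]]):
            errors.append(f"{name} should be {props[name]['type']}")
    return errors


if __name__ == "__main__":
    print(validate_args({"query": "claude system prompt"}, WEB_SEARCH_PARAMS))  # []
    print(validate_args({"q": 3}, WEB_SEARCH_PARAMS))
```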

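Outside the Tool Definitions section, there are plenty more tool-use instructions. The Citation Instructions, Artifacts Instructions, Search Instructions, and Google Integration Watchouts all detail how these tools should be _used_ within the context of a chatbot interaction. For example, there are _repeated_ notes reminding Claude not to use the search tool for topics it already knows about. (You get the sense this is, or was, a difficult behavior to eliminate!)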

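In fact, throughout this prompt are bits and pieces that feel like hotfixes. The Google Integration Watchouts section (my label; it lacks any XML delineation or organization) is just 5 lines dropped in without any structure. Each line seems designed to dial in ideal behavior. For example: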

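> If you are using any gmail tools and the user has instructed you to find messages for a particular person, do NOT assume that person's email. Since some employees and colleagues share first names, DO NOT assume the person who the user is referring to shares the same email as someone who shares that colleague's first name that you may have seen incidentally (e.g. through a previous email or calendar search). Instead, you can search the user's email with the first name and then ask the user to confirm if any of the returned emails are the correct emails for their colleagues.

All in, nearly 80% of this prompt pertains to tools: how to use them and when to use them.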
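The Gmail watchout quoted above is essentially a disambiguation rule. Never map a first name to a single address on your own. Instead, gather the candidates and let the user pick. Below is a minimal sketch of that logic. The message format (`from_name`/`from_email` keys) and the helpers `candidate_addresses` and `next_step` are hypothetical.

```python
from typing import Dict, List


def candidate_addresses(first_name: str, messages: List[Dict[str, str]]) -> List[str]:
    """Collect distinct sender addresses whose display name's first word matches first_name.

    Matching is case-insensitive. Messages are hypothetical dicts with
    "from_name" and "from_email" keys.
    """
    target = first_name.casefold()
    found: List[str] = []
    for msg in messages:
        parts = msg["from_name"].split()
        if parts and parts[0].casefold() == target and msg["from_email"] not in found:
            found.append(msg["from_email"])
    return found


def next_step(first_name: str, messages: List[Dict[str, str]]) -> str:
    """Never guess: even a single match is returned for the user to confirm."""
    found = candidate_addresses(first_name, messages)
    if not found:
        return f"I couldn't find anyone named {first_name}. What is their email address?"
    options = ", ".join(found)
    return f"I found these addresses for {first_name}: {options}. Which one is your colleague?"


if __name__ == "__main__":
    inbox = [
        {"from_name": "Alex Kim", "from_email": "alex.kim@example.com"},
        {"from_name": "Alex Rivera", "from_email": "arivera@example.com"},
        {"from_name": "Sam Lee", "from_email": "sam@example.com"},
    ]
    print(next_step("alex", inbox))
```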

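My immediate question, after realizing this, was: "Why are there so many tool instructions outside the MCP-provided section?" (The gray boxes above.) Poring over this, I'm of the mind that it's just separation of concerns. The MCP details contain information relevant to any program using a given tool, while the non-MCP bits of the prompt provide details specific _only to the chatbot application_, allowing the MCPs to be used by a host of different applications without modification. It's standard program design, applied to prompting.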
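Read this way, the prompt is assembled from independent layers. The sketch below illustrates that idea. The generic tool text is reused as-is, and only the application layer changes. The section tags, the helper `compose_prompt`, and the second "IDE" application are hypothetical.

```python
from typing import Dict

# Layer 1: generic tool documentation, as an MCP server might provide it (hypothetical text).
MCP_TOOL_DOCS = "web_search: Search the web. Parameters: query (string, required)."

# Layer 2: application-specific guidance about *when* to use the tool.
CHATBOT_GUIDANCE = "Do not search for topics you already know well; search for recent events."
IDE_GUIDANCE = "Search only when the user asks about a library's documentation."  # hypothetical app


def compose_prompt(tool_docs: str, app_guidance: str, behavior: str) -> str:
    """Concatenate independent layers so each can change without touching the others."""
    sections: Dict[str, str] = {
        "tools": tool_docs,
        "tool_guidance": app_guidance,
        "behavior": behavior,
    }
    return "\n\n".join(f"<{tag}>\n{body}\n</{tag}>" for tag, body in sections.items())


chat_prompt = compose_prompt(MCP_TOOL_DOCS, CHATBOT_GUIDANCE, "Be warm and concise.")
ide_prompt = compose_prompt(MCP_TOOL_DOCS, IDE_GUIDANCE, "Be terse.")

# Both applications embed the same MCP text unchanged; only their guidance differs.
print(MCP_TOOL_DOCS in chat_prompt and MCP_TOOL_DOCS in ide_prompt)
```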

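At the end of the prompt, we enter what I call the Claude Behavior section. This part details how Claude should behave and respond to user requests, and prescribes what it should and _shouldn't_ do. Reading it straight through evokes Radiohead's "Fitter, Happier." It's what most people think of when they think of system prompts.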

But hotfixes are apparent here as well. There are many lines clearly written to foil common LLM "gotchas", like:

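- "If Claude is asked to count words, letters, and characters, it thinks step by step before answering the person. It explicitly counts the words, letters, or characters by assigning a number to each. It only answers the person once it has performed this explicit counting step." This is a hedge against the "How many R's are in the word 'Raspberry'?" question and similar stumpers.

- "If Claude is shown a classic puzzle, before proceeding, it quotes every constraint or premise from the person's message word for word before inside quotation marks to confirm it's not dealing with a new variant." A common way to foil LLMs is to slightly change a common logic puzzle: the LLM matches it contextually to the more common variant and misses the edit.

- "Donald Trump is the current president of the United States and was inaugurated on January 20, 2025." According to this prompt, Claude's knowledge cutoff is October 2024, so it wouldn't otherwise know this fact.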
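The counting instruction asks the model to do what a program does naturally: enumerate each character, number the matches, and only then report the total. Here is a small sketch of that explicit procedure applied to the "Raspberry" example. Treating "R" and "r" as the same letter is an assumption about what the question intends, and the helper name `explicit_count` is hypothetical.

```python
from typing import List, Tuple


def explicit_count(word: str, letter: str) -> Tuple[int, List[str]]:
    """Number every matching character (case-insensitive), then return the total and the trace."""
    steps: List[str] = []
    count = 0
    for position, ch in enumerate(word, start=1):
        if ch.casefold() == letter.casefold():
            count += 1
            steps.append(f"#{count}: '{ch}' at position {position}")
    return count, steps


total, trace = explicit_count("Raspberry", "r")
for line in trace:
    print(line)
print(f"Total: {total}")  # Total: 3
```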

But my favorite note is this one: "If asked to write poetry, Claude avoids using hackneyed imagery or metaphors or predictable rhyming schemes."

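Reading through the prompt, I wonder how this is managed at Anthropic. An irony of prompts is that while they're readable by anyone, they're difficult to scan and usually lack structure. Anthropic makes heavy use of XML-style tags to mitigate this (one has to wonder whether these are more useful for the humans editing the prompt or for the LLM...), and their invention and adoption of MCP is clearly an asset.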
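To see why XML-style tags help both audiences, here is a small sketch that wraps prompt sections in tags and then pulls one back out by name. The tag names and helpers are invented for illustration. The regex approach assumes that a tag is never nested inside another tag of the same name.

```python
import re
from typing import Dict, Optional


def tag_sections(sections: Dict[str, str]) -> str:
    """Wrap each section in an XML-style tag so its boundaries are explicit."""
    return "\n".join(f"<{name}>\n{text.strip()}\n</{name}>" for name, text in sections.items())


def extract_section(prompt: str, name: str) -> Optional[str]:
    """Return the body of the first <name>...</name> block, or None if it is absent."""
    tag = re.escape(name)
    match = re.search(rf"<{tag}>\n(.*?)\n</{tag}>", prompt, flags=re.DOTALL)
    return match.group(1) if match else None


prompt = tag_sections({
    "citation_instructions": "Cite sources for claims drawn from search results.",
    "poetry_guidance": "Avoid hackneyed imagery or predictable rhyming schemes.",
})
print(extract_section(prompt, "poetry_guidance"))
```

The same boundaries that let a script find a section also give a human editor something to search for, which is part of why the question in the paragraph above is hard to answer.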

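But what software are they using to version this? Hotfixes abound: are these dropped in one by one, or are they batched in bursts of evaluations? Finally: at what point do you wipe the slate clean and start with a blank page? Do you ever?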
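One plausible answer to the versioning question is to treat the prompt like any other source file and review each hotfix as a diff. The sketch below uses Python's standard `difflib`. The file names and prompt text are hypothetical, and nothing here describes Anthropic's actual tooling.

```python
import difflib
from typing import List

v1 = """Claude is a helpful assistant.
If asked to write poetry, Claude avoids hackneyed imagery.
""".splitlines(keepends=True)

# A one-line "hotfix" appended after an evaluation surfaced a failure mode.
v2 = v1 + ["If Claude is asked to count letters, it counts them explicitly.\n"]


def prompt_diff(old: List[str], new: List[str]) -> str:
    """Unified diff between two prompt versions, as a reviewer would see it."""
    return "".join(difflib.unified_diff(old, new,
                                        fromfile="system_prompt_v1.txt",
                                        tofile="system_prompt_v2.txt"))


print(prompt_diff(v1, v2))
```

Reviewing prompts this way makes each hotfix an individually reviewable change. It does not settle when to start over from a blank page.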

A prompt like this is a good reminder that chatbots are much more than just a model, and that we're learning how to manage prompts as we go.

Update: Ásgeir Thor Johnson writes in to say he's posted a new version of the system prompt, cleaned up to make it more "human-readable":

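> I uploaded many files to try and make it kind of 'human-readable,' but this file is the one closest to the original. It's probably more useful for power users, and I also include in it, as a comment at the top, some explanations on how to reproduce and all kinds of meta info.
>
> There are various complications when getting Claude to write out the prompt. Deciding the best way to share the prompt has been quite a headache, which is the reason I have many files with the same system prompt in my repo. All of the info about this is in this new file in detail.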



Article link: https://www.qimuai.cn/?post=111
